Intel Announces Cascade Lake: Up to 56 Cores and Optane Persistent Memory DIMMs

Cascade Lake and Friends

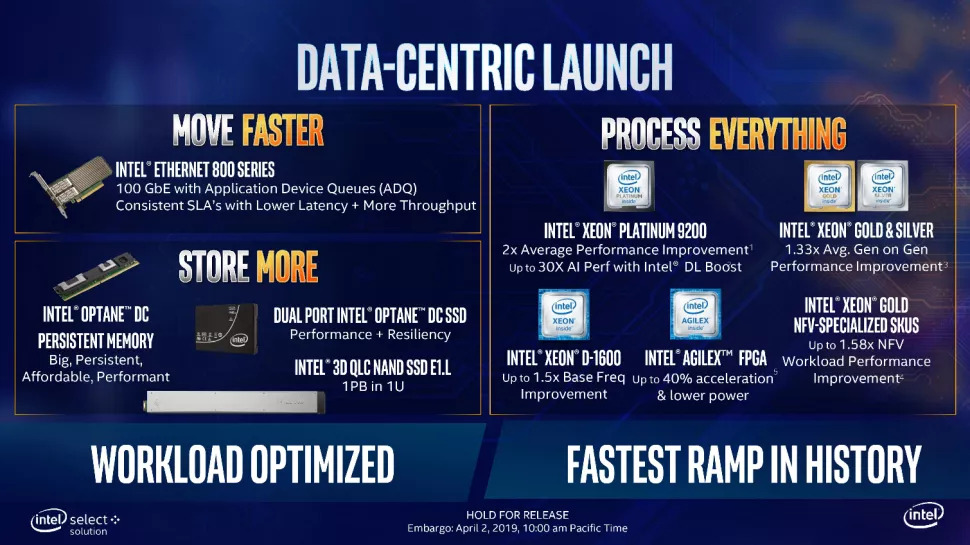

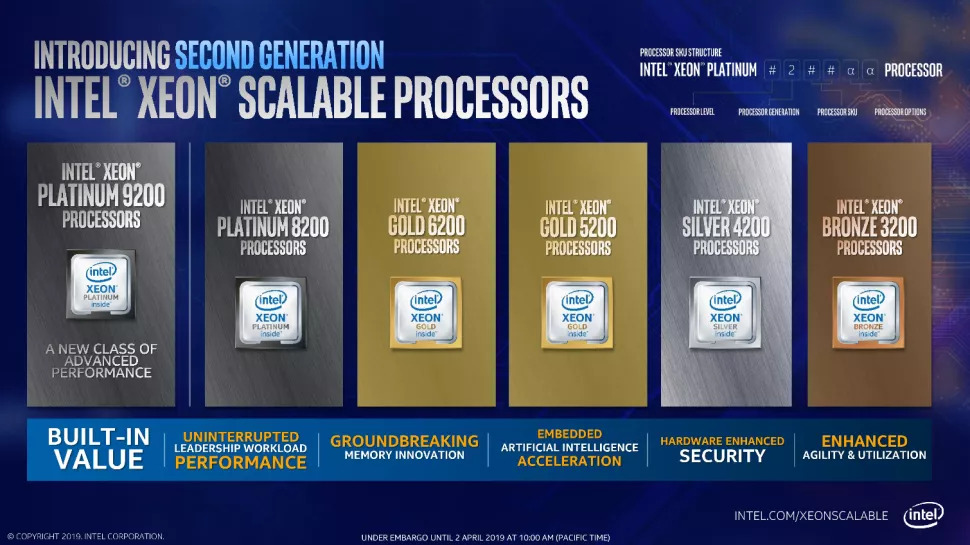

Intel announced its Cascade Lake line of Xeon Scalable data center processors at its Data-Centric Innovation Day here in San Francisco. The second-generation lineup of Xeon Scalable processors comes in 53 flavors that span up to 56 cores and 12 memory channels per chip. As a reminder that the company is briskly expanding beyond “just” processors, Intel also announced the final arrival of its Optane DC Persistent Memory DIMMs, along with a range of new data center SSDs, Ethernet controllers, 10nm Agilex FPGAs, and Xeon D processors.

This broad spectrum of products uses Intel’s overwhelming presence in the data center (it currently occupies ~95% of the world’s server sockets) as a springboard to chew into other markets, including its new assault on the memory space with the Optane DC Persistent Memory DIMMs. The long-awaited DIMMs open a new market for Intel and have the potential to disrupt the entire memory hierarchy, but they could also serve as a key component that helps the company fend off AMD’s coming 7nm EPYC Rome processors.

Intel designed the new suite of products to address data storage, movement, and processing from the edge to the data center, hence its new Move, Store, Process mantra that encapsulates its end-to-end strategy. We’re working on our full review of the Xeon Scalable processors, but in the meantime, let’s take a closer look at a few of Intel’s announcements.

56 Cores, 112 Threads, and a Whole Lotta TDP

AMD has already made some headway with its existing EPYC data center processors, but the company’s forthcoming 7nm Rome processors pose an even bigger threat with up to 64 cores and 128 threads packed into a single chip, wielding a massive 128 cores and 256 threads in a single dual-socket server. Rome’s increased performance and reduced power consumption purportedly outclass Intel’s existing lineup of Xeon processors, so Intel turned to a new line of Cascade Lake-AP processors to shore up its defenses in the high core-count space. These new processors slot in as a new upper tier of Intel’s Xeon Platinum lineup.

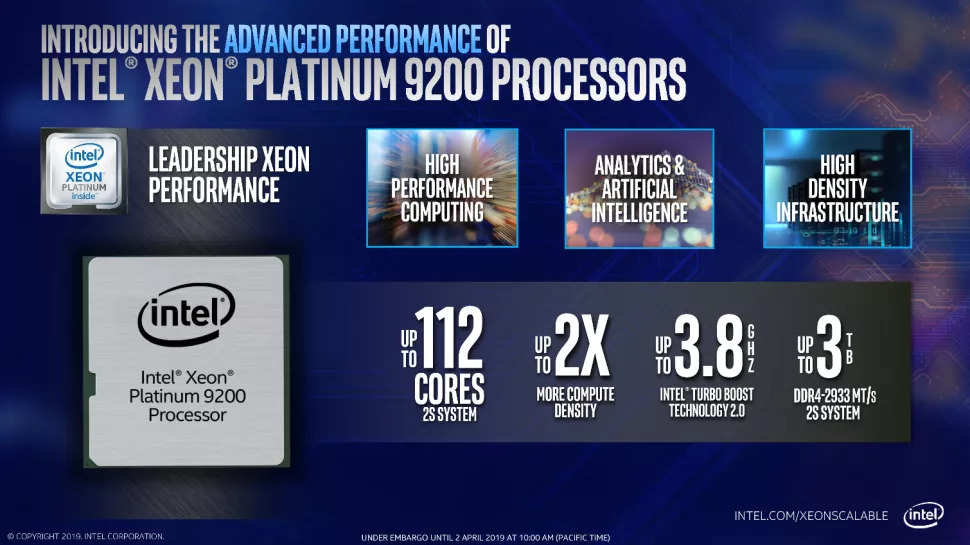

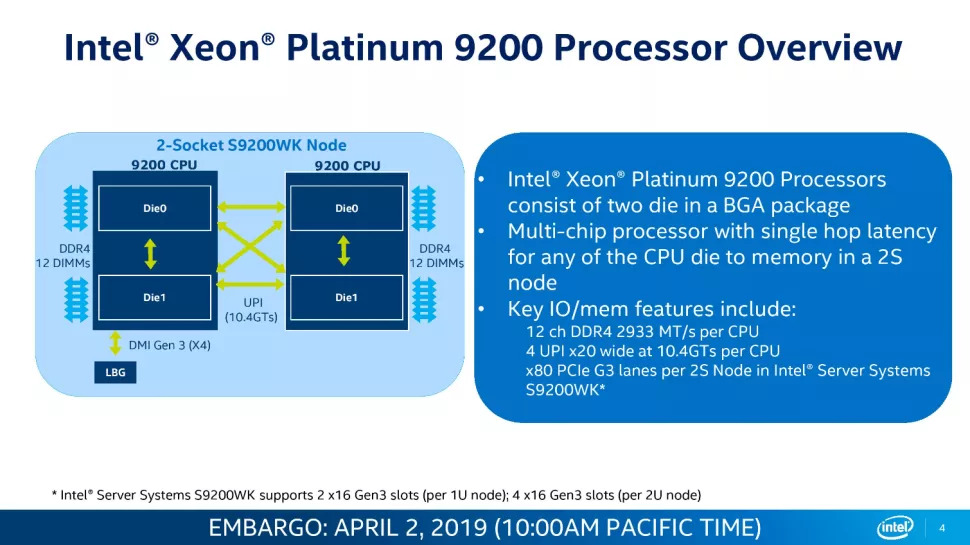

These new 9200-series chips come packing up to 56 cores and 112 threads in a dual-die MCM (Multi-Chip Module) design, meaning that two die come together to form a single chip. Intel claims the processors offer the highest performance available for HPC, AI, and IaaS workloads. The processors also offer the most memory channels, and thus the highest memory bandwidth, of any data center processor. Performance density, high memory capacity, and blistering memory throughput are the goal here, which plays well to the HPC crowd. This approach signifies Intel’s embrace of a multi-chip design, much like AMD’s EPYC processors, for its highest core-count models.

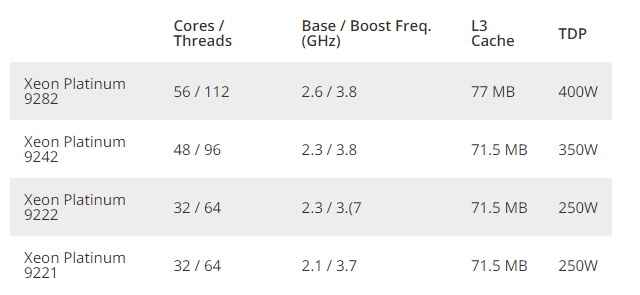

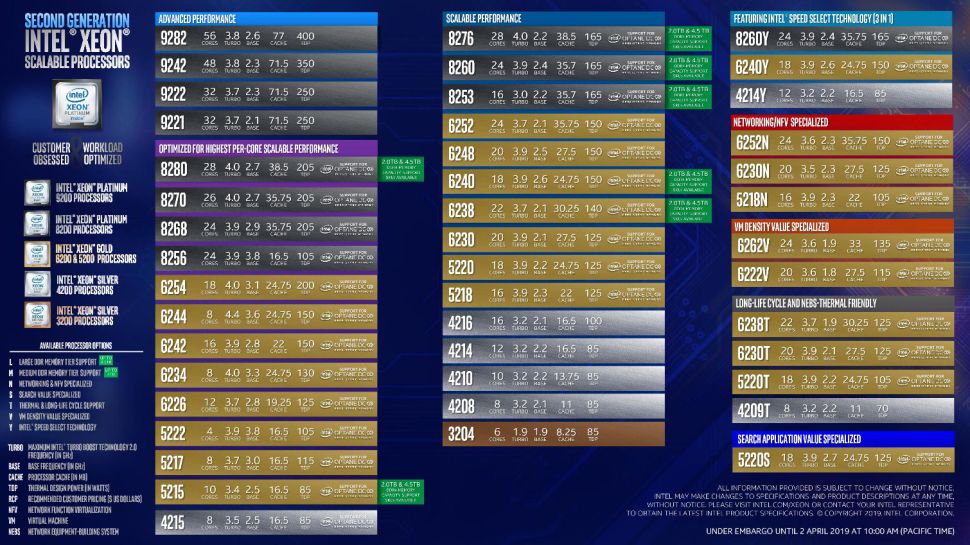

The 9200-series comes in three flavors with 56-, 48-, and 32-core models on offer. Clock speeds top out with the 56-core Xeon Platinum 9282 model with a 3.8 GHz boost, and base speeds weigh in at an impressive 2.6 GHz. The flagship Xeon Platinum 9282 also comes equipped with 77MB of L3 cache.

Each processor consists of two modified 28-core XCC (extreme core count) die, and each die wields a six-channel memory controller. Together, this gives each processor access to 12 channels of DDR4-2933 memory, for up to 24 memory channels and 3TB of DDR4 memory in a two-socket server. That enables up to 407 GB/s of memory throughput for a two-socket server equipped with the 56-core models.
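The 407 GB/s figure is easier to judge against the theoretical ceiling. The sketch below computes peak DDR4 bandwidth from channel count and data rate, assuming the standard 64-bit (8-byte) data bus per channel and ignoring ECC bits. By that math, the two-socket peak is roughly 563 GB/s, so the quoted 407 GB/s lands at about 72% of it, which is consistent with a measured rather than theoretical number (an assumption; Intel's exact methodology isn't stated here).

```python
def peak_ddr4_bandwidth_gbs(channels, transfer_rate_mts, bytes_per_transfer=8):
    """Theoretical peak DDR4 bandwidth in GB/s (10^9 bytes per second).

    Each channel moves one 64-bit (8-byte) word per transfer, so the peak is
    channels x transfers per second x bytes per transfer.
    """
    return channels * transfer_rate_mts * 1_000_000 * bytes_per_transfer / 1e9


per_socket = peak_ddr4_bandwidth_gbs(channels=12, transfer_rate_mts=2933)
two_socket = peak_ddr4_bandwidth_gbs(channels=24, transfer_rate_mts=2933)
print(f"per socket: {per_socket:.1f} GB/s")    # per socket: 281.6 GB/s
print(f"two sockets: {two_socket:.1f} GB/s")   # two sockets: 563.1 GB/s
print(f"407 GB/s is {407 / two_socket:.0%} of the two-socket peak")  # 72%
```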

Intel still uses garden-variety thermal interface grease between the die and heatspreader, but the 9282 weighs in with a monstrous 400W TDP, while the 48-core models have a 350W TDP and the 32-core models slot in with a 250W rating. Intel says the 400W models require water cooling, while the 350W and 250W models can use traditional air cooling. Unlike the remainder of the Cascade Lake processors, these chips are not compatible with previous-generation sockets. Instead of being socketed processors, the 9200-series processors come in a BGA (Ball Grid Array) package that is soldered directly to the host motherboard via a 5903-ball interface.

The 9200-series chips also expose up to 40 PCIe 3.0 lanes per chip, for a total of 80 lanes in a dual-socket server. Each die has 64 PCIe lanes at its disposal, but Intel carves off some of the lanes for the UPI (Ultra Path Interconnect) connections that tie together the two die inside the processor, while others are dedicated to communication between the two chips in a two-socket server. Overall, that provides four UPI pathways per socket, each running at 10.4 GT/s.

A dual-socket server presents itself as a quad-socket server to the host, meaning the four NUMA nodes appear as four distinct CPUs, but the dual-die topology poses latency challenges for access to ‘far’ memory banks. Intel says it has largely mitigated the problem with a single-hop routing scheme that provides 79ns of latency for near memory and 130ns for far memory access.
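How much the far-memory penalty matters depends on how many accesses stay local. As a rough model, assuming every remote access costs the same single-hop 130ns quoted above, average latency is a weighted mix of the two figures. The helper name is hypothetical; the point is the contrast between NUMA-unaware placement (pages spread evenly over four nodes, so only a quarter are local) and NUMA-aware placement.

```python
def expected_latency_ns(local_fraction, near_ns=79.0, far_ns=130.0):
    """Average memory latency for a given share of local (near) accesses.

    Assumes every remote access pays the same single-hop 'far' latency.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * near_ns + (1.0 - local_fraction) * far_ns


# NUMA-unaware: memory spread evenly over four nodes, so 1/4 of accesses are local.
print(expected_latency_ns(0.25))  # 117.25
# NUMA-aware placement that keeps 90% of accesses on the local node.
print(expected_latency_ns(0.90))
```

The gap between those two cases is why NUMA-aware scheduling and memory allocation remain important on these systems even with the single-hop routing.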

Intel has provided its partners with a reference platform design that crams up to four nodes, each containing two of the 9200-series processors, into a single 2U rack enclosure. Intel hasn’t announced pricing for the chips, largely because they will only be available inside OEM systems, but says they are shipping to customers now.
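Fully populated with the flagship 9282, that reference chassis adds up quickly. The arithmetic below uses only figures quoted in this article and assumes every node carries two 9282s; it counts processor TDP alone and ignores memory, storage, and fans.

```python
# Figures quoted above; assumes every node carries two Xeon Platinum 9282 chips.
NODES_PER_CHASSIS = 4
SOCKETS_PER_NODE = 2
CORES_PER_CHIP = 56
THREADS_PER_CORE = 2
TDP_WATTS_PER_CHIP = 400

sockets = NODES_PER_CHASSIS * SOCKETS_PER_NODE
cores = sockets * CORES_PER_CHIP
threads = cores * THREADS_PER_CORE
cpu_tdp_watts = sockets * TDP_WATTS_PER_CHIP

print(f"Per 2U: {sockets} chips, {cores} cores, {threads} threads")  # 8 chips, 448 cores, 896 threads
print(f"CPU TDP alone: {cpu_tdp_watts} W")                            # 3200 W
```

Over 3kW of processor TDP in 2U helps explain why the 400W parts require water cooling.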

Cascade Lake Xeon Platinum, Gold and Silver

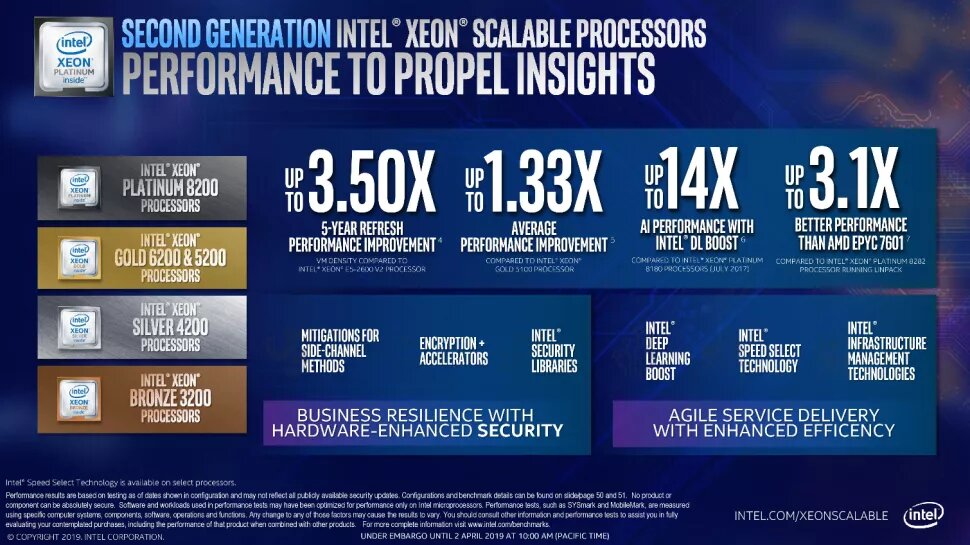

Intel’s new mainstream Xeon processors come as an iterative update to its Purley lineup and use the same Skylake microarchitecture as their predecessors, but Intel claims the new chips offer, on average, a 30% gen-on-gen performance boost in real-world workloads. Compared with the previous-gen models, Cascade Lake offers up to 25% more cores at the same price points.

Cascade Lake also represents Intel’s first data center processors with in-silicon mitigations for some variants of the notorious Meltdown and Spectre vulnerabilities. The existing software patches reduce performance by an amount that varies with the workload, but the newer mitigations baked directly into the processors should help reduce the impact.

Cascade Lake shares many fundamental design elements with the first Xeon Scalable lineup, like a 28-core ceiling, up to 38.5 MB of L3 cache, the UPI (Ultra Path Interconnect), up to six memory channels, AVX-512 support, and up to 48 PCIe lanes. These processors drop into the same socket as the existing generation of Purley chips.

Intel’s most notable advancements come on the process and memory support fronts. Intel boosted memory support from DDR4-2666 to DDR4-2933 and doubled capacity to up to 1.5TB of memory per chip. The company has also moved to its 14nm++ process, which Intel says allowed it to improve frequencies and power consumption and to make targeted improvements to critical speed paths on the die.

Intel’s new DL Boost suite adds support for multiple new AI features, which the company claims makes Cascade Lake the only CPU specifically optimized for AI workloads. Overall, Intel claims these technologies provide a 14X performance increase in AI inference workloads. Intel also added support for the new VNNI (Vector Neural Network Instructions), which accelerate operations on the smaller data types commonly used in machine learning and inference. VNNI fuses three instructions into one to boost int8 performance (VPDPBUSD) and fuses two instructions into one to boost int16 performance (VPDPWSSD). These AVX-512 instructions still operate within the normal AVX-512 voltage/frequency curve.
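A scalar model makes the fusion concrete. This Python sketch emulates a single 32-bit lane of VPDPBUSD and VPDPWSSD following their documented semantics, next to the older VPMADDUBSW, VPMADDWD, VPADDD sequence that VPDPBUSD replaces. It is an illustration only: real code would use the AVX-512 VNNI intrinsics on full 512-bit vectors, and the function names here are hypothetical helpers.

```python
def wrap_int32(x):
    """Wrap an arbitrary Python int to signed 32-bit two's complement."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x & 0x80000000 else x


def saturate_int16(x):
    """Clamp to the signed 16-bit range, as VPMADDUBSW does."""
    return max(-32768, min(32767, x))


def vpdpbusd_lane(acc, a_u8, b_s8):
    """One 32-bit lane of VPDPBUSD: four u8 x s8 products added to acc, no intermediate saturation."""
    assert len(a_u8) == len(b_s8) == 4
    return wrap_int32(acc + sum(a * b for a, b in zip(a_u8, b_s8)))


def vpdpwssd_lane(acc, a_s16, b_s16):
    """One 32-bit lane of VPDPWSSD: two s16 x s16 products added to acc."""
    assert len(a_s16) == len(b_s16) == 2
    return wrap_int32(acc + a_s16[0] * b_s16[0] + a_s16[1] * b_s16[1])


def legacy_int8_lane(acc, a_u8, b_s8):
    """The same int8 lane built from the pre-VNNI three-instruction sequence."""
    assert len(a_u8) == len(b_s8) == 4
    # 1) VPMADDUBSW: u8 x s8 products, adjacent pairs summed and saturated to int16
    words = [saturate_int16(a_u8[i] * b_s8[i] + a_u8[i + 1] * b_s8[i + 1]) for i in (0, 2)]
    # 2) VPMADDWD against a vector of ones: widen both int16 values and add them into one int32
    dword = words[0] * 1 + words[1] * 1
    # 3) VPADDD: add to the running 32-bit accumulator
    return wrap_int32(acc + dword)


print(vpdpbusd_lane(10, [1, 2, 3, 4], [5, -6, 7, -8]))     # -8
print(legacy_int8_lane(10, [1, 2, 3, 4], [5, -6, 7, -8]))  # -8
# Large inputs expose the legacy path's int16 saturation; the fused instruction avoids it.
print(vpdpbusd_lane(0, [255, 255, 0, 0], [127, 127, 0, 0]))     # 64770
print(legacy_int8_lane(0, [255, 255, 0, 0], [127, 127, 0, 0]))  # 32767
```

Besides saving two instructions per step, the fused form skips the intermediate int16 saturation, which is one reason int8 kernels targeting pre-VNNI hardware have to be careful about input ranges.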

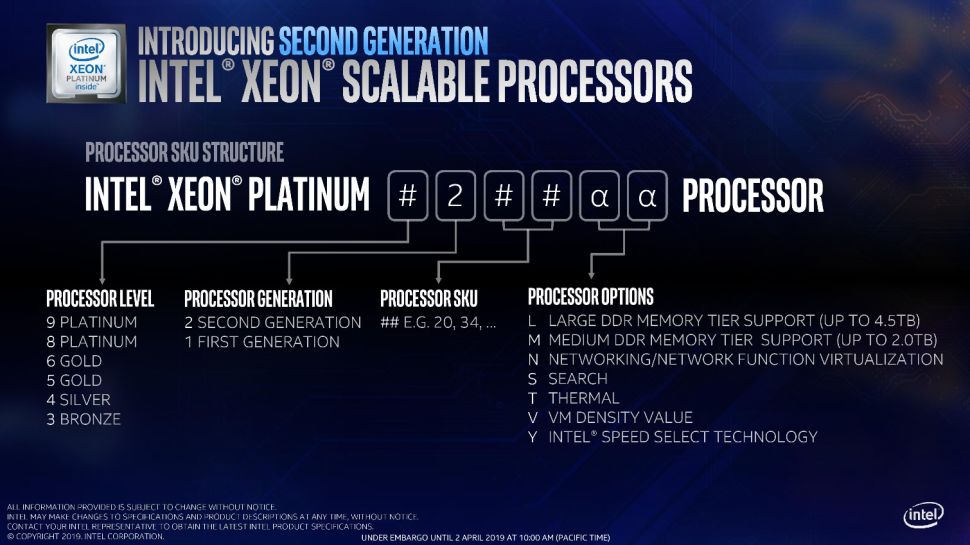

Intel’s Cascade Lake lineup has grown tremendously with the addition of several new types of processors designed for specific use cases, and the company continues to segment its stack into Platinum, Gold, Silver, and Bronze categories. Now, however, the Cascade Lake-AP processors that span up to 56 cores slot in as the ultimate performance tier of the Platinum category, while the standard 8200-series Platinum models, which top out at 28 cores, are drop-in compatible with existing Xeon Scalable servers with the LGA 3647 socket.

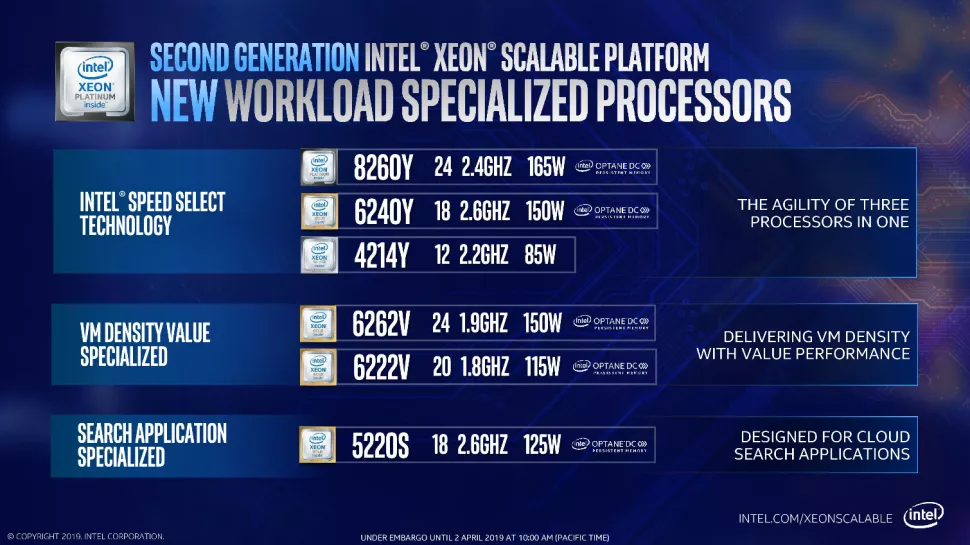

Intel also introduced new workload-specialized processors that support Intel’s Speed Select technology. These processors allow finer-grained control of processor frequency, such as assigning certain cores to run at a set frequency so that workloads pinned to those cores (through affinity settings) receive a better quality of service, which boosts performance. Networking-optimized SKUs also forgo Turbo Boost entirely to eliminate sporadic frequency bursts that introduce performance inconsistency.
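Speed Select’s per-core frequency assignments are configured on the platform side, which the code below does not attempt. The affinity half of the scheme is ordinary OS scheduling, though. Here is a minimal Linux-only sketch, using Python’s os.sched_setaffinity, of pinning a latency-sensitive process to a chosen set of cores; which cores count as the “high-priority” ones is a hypothetical stand-in.

```python
import os


def pin_to_cores(pid, cores):
    """Restrict a process to the given logical CPUs (Linux only) and return the new mask.

    pid 0 means the calling process. Cores outside its current affinity mask are dropped.
    """
    allowed = os.sched_getaffinity(pid)
    requested = set(cores) & allowed
    if not requested:
        raise ValueError(f"none of {sorted(cores)} are usable; allowed CPUs: {sorted(allowed)}")
    os.sched_setaffinity(pid, requested)
    return os.sched_getaffinity(pid)


if __name__ == "__main__":
    original_mask = os.sched_getaffinity(0)
    # Hypothetical stand-in for the cores configured to run at a higher guaranteed
    # frequency; here we just take the lowest-numbered CPU this process may use.
    priority_cores = {min(original_mask)}
    print("pinned to", pin_to_cores(0, priority_cores))
    os.sched_setaffinity(0, original_mask)  # restore the original placement
```

The same effect is available from the shell with taskset, or per thread through the pthread affinity APIs in C.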

Intel segments its product stack through several variables, including core count, base frequencies, PCIe connectivity, memory capacity/data rates, AVX-512 functionality, Hyper-Threading, UPI connections, and FMA units per core. Intel also doesn’t offer Optane DC Persistent Memory support on some Bronze models. In other words, you pay every penny for every single feature you get.

You’ll notice that Intel hasn’t released pricing for the exotic 56-core models, because they only come in OEM systems. The flagship 8200-series processor, the Xeon Platinum 8280, weighs in at $10,009 per chip. The Platinum lineup dips down to $3,115 for the 8253 model, while the Gold family spans from $1,221 to $3,984 for the networking-optimized variant. Meanwhile, the Silver lineup spans from $417 to $1,002. As usual, the 1K-unit tray pricing doesn’t reflect what the largest companies will pay after volume discounts.
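Tray prices are easier to compare on a per-core basis. The arithmetic below uses only the 8280’s quoted price and the 28-core ceiling of the 8200-series; like the list prices themselves, it says nothing about what volume buyers actually pay. The helper name is hypothetical.

```python
def price_per_core(tray_price_usd, cores):
    """1K-unit tray price divided by core count."""
    return tray_price_usd / cores


# Xeon Platinum 8280: $10,009 tray price, 28 cores (the 8200-series ceiling)
print(f"${price_per_core(10_009, 28):,.2f} per core")  # $357.46 per core
```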

Optane DIMMs Have Bright Prospects

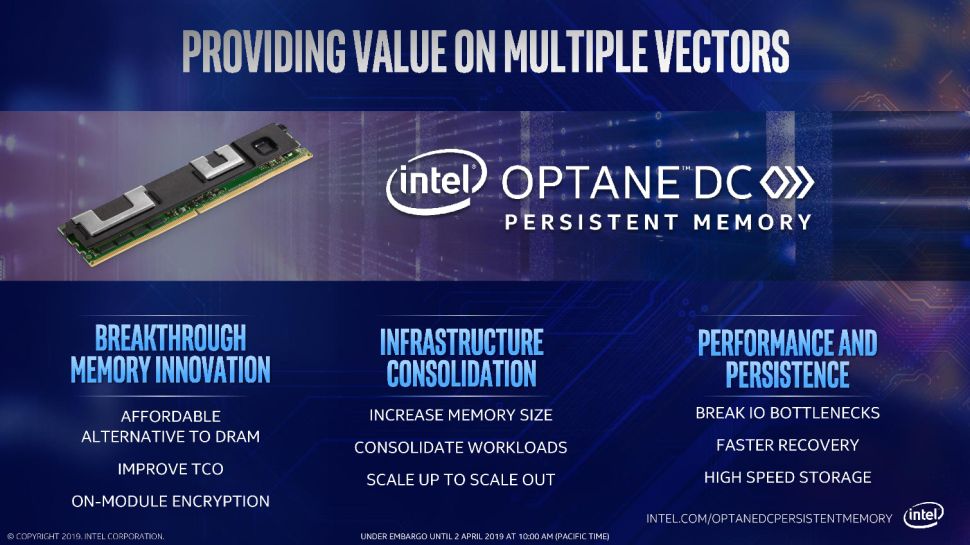

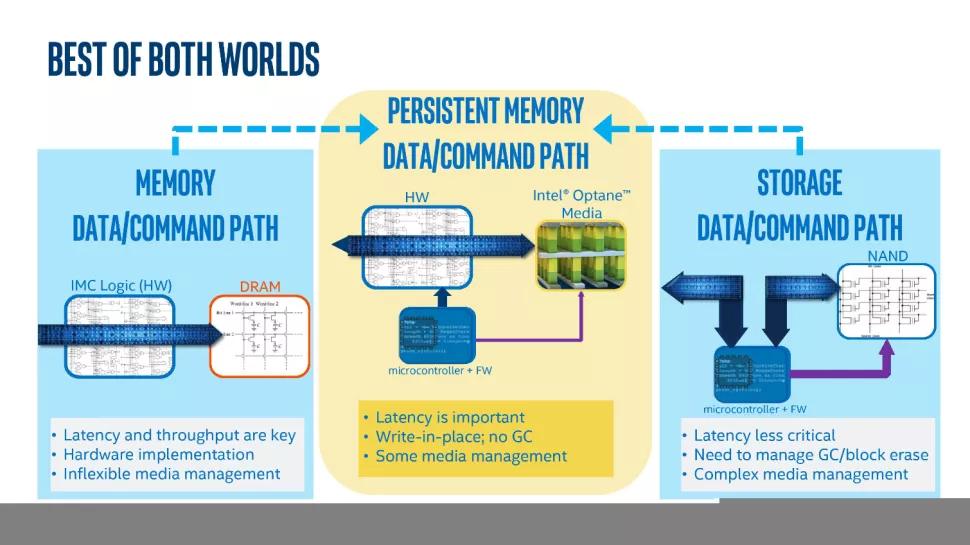

Intel also announced the long-awaited arrival of its new Optane DC Persistent Memory DIMMs. The new DIMMs slot into the DDR4 interface, just like a normal stick of RAM, but come in three capacities of 128, 256, and 512GB. That’s a massive capacity increase compared to the industry-leading 128GB DDR4 memory sticks and enables a total of up to 6.5TB of Optane storage in a dual-socket server. Intel designed the DIMMs to bridge both the performance and pricing gap between storage and memory, and although Intel hasn’t released pricing yet, the new DIMMs should land at much lower price points than typical DRAM.

The new modules use Intel’s 3D XPoint memory and can be addressed as either memory or storage. Unlike DRAM, 3D XPoint retains data after power is removed, thus enabling radical new use cases. 3D XPoint is also fast enough to serve as a slower tier of DRAM, but it does require tuning the application and driver stacks to accommodate its unique characteristics.
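When the modules are used as storage in a byte-addressable way, applications typically memory-map a file on a DAX-mounted file system and then use ordinary loads and stores. Intel’s Persistent Memory Development Kit (PMDK) is the usual route in C; the POSIX-only Python sketch below shows the same idea with plain mmap and msync (via flush()). The file path and helper names are hypothetical, and on a regular file system the same code simply goes through the page cache.

```python
import mmap
import os
import tempfile


def persist_record(path, offset, payload, size=4096):
    """Store payload into a memory-mapped file at offset, then flush it for durability."""
    if offset + len(payload) > size:
        raise ValueError("payload does not fit in the mapping")
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)  # grow the file so the mapping is fully backed
        with mmap.mmap(fd, size) as region:  # shared, read/write mapping
            region[offset:offset + len(payload)] = payload  # plain store into mapped memory
            region.flush()  # msync: the durability point
    finally:
        os.close(fd)


def read_record(path, offset, length):
    """Read bytes back through the normal file interface."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


if __name__ == "__main__":
    # Hypothetical stand-in for a file on a DAX mount such as /mnt/pmem
    demo_path = os.path.join(tempfile.mkdtemp(), "record.bin")
    message = b"hello, persistent memory"
    persist_record(demo_path, 128, message)
    print(read_record(demo_path, 128, len(message)))
```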

Much like NAND, 3D XPoint has a finite lifespan, but Intel says that it warranties the modules for an unlimited amount of use over the five-year warranty period. Intel bases this endurance rating on the maximum possible throughput to the DIMMs for the entire five years, which should put any endurance concerns to rest. Intel also encrypts all data stored on the DIMMs to protect user data.

The DIMMs feature a controller, much like an SSD, that manages an abstraction layer. The DIMMs consume more power than standard DRAM, so thermal throttling can become a concern in dense servers. To address the issue, Intel offers a range of power settings that span from 12W to 18W at quarter-watt granularity, letting operators balance performance against heat output for their environment. Overall, the DIMMs consume about 3x the power of a standard 8/16GB DDR4 DIMM. Power consumption is a huge consideration in the data center, and gaining 32x the memory capacity in exchange for a 3x increase in power consumption is a dramatic improvement.
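The numbers in that paragraph reduce to two small calculations: how many power limits a quarter-watt step yields between 12W and 18W, and how much capacity per watt the roughly 3x power increase buys. The comparison assumes a 512GB Optane DIMM against a 16GB DDR4 DIMM, the pairing that produces the 32x figure; the helper name is hypothetical.

```python
def power_settings(low_w=12.0, high_w=18.0, step_w=0.25):
    """Every selectable power limit from low_w to high_w, inclusive, at step_w spacing."""
    steps = round((high_w - low_w) / step_w)
    return [low_w + i * step_w for i in range(steps + 1)]


settings = power_settings()
print(len(settings), settings[0], settings[-1])  # 25 12.0 18.0

# 512GB Optane DIMM vs. a 16GB DDR4 DIMM, at roughly 3x the power
capacity_ratio = 512 / 16  # 32x the capacity
power_ratio = 3            # ~3x the power
print(f"{capacity_ratio / power_ratio:.1f}x the capacity per watt")  # 10.7x the capacity per watt
```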

Intel designed a new memory controller to support the DIMMs. The DIMMs are physically and electrically compatible with the JEDEC-standard DIMM slot but use an Intel-proprietary protocol to deal with the uneven latency that stems from writing data to persistent memory. Intel confirmed that the Optane DIMMs can share a memory channel with normal DIMMs, but the host system requires at least one stick of DRAM to operate.

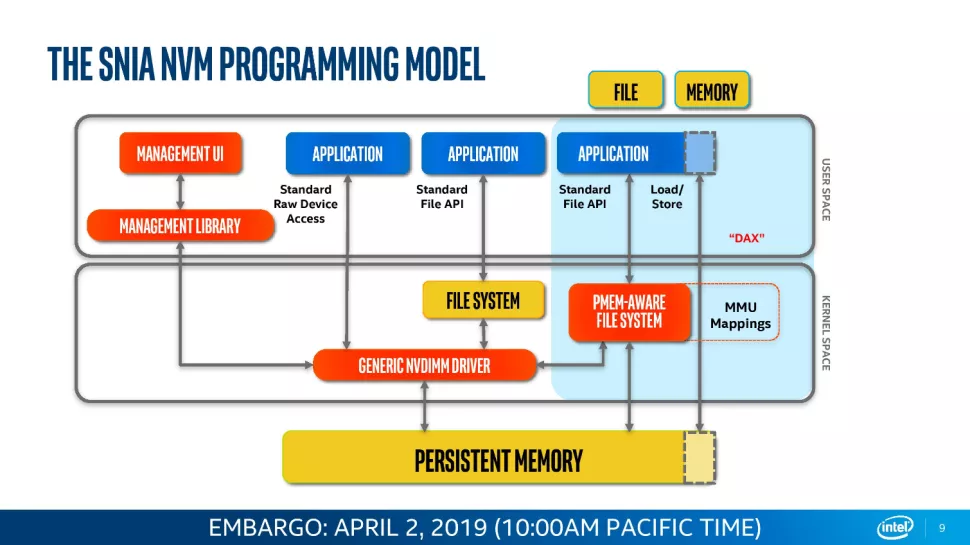

The new class of memory requires new programming models to unleash its full performance benefit, and application performance varies by workload. Intel has worked diligently to promote the ecosystem, and we’re working on a full review of the Optane DC Persistent Memory DIMMs. Stay tuned for deep-dive details.

Xeon D-1600, New SSDs, and 100Gb Ethernet

Xeon D-1600

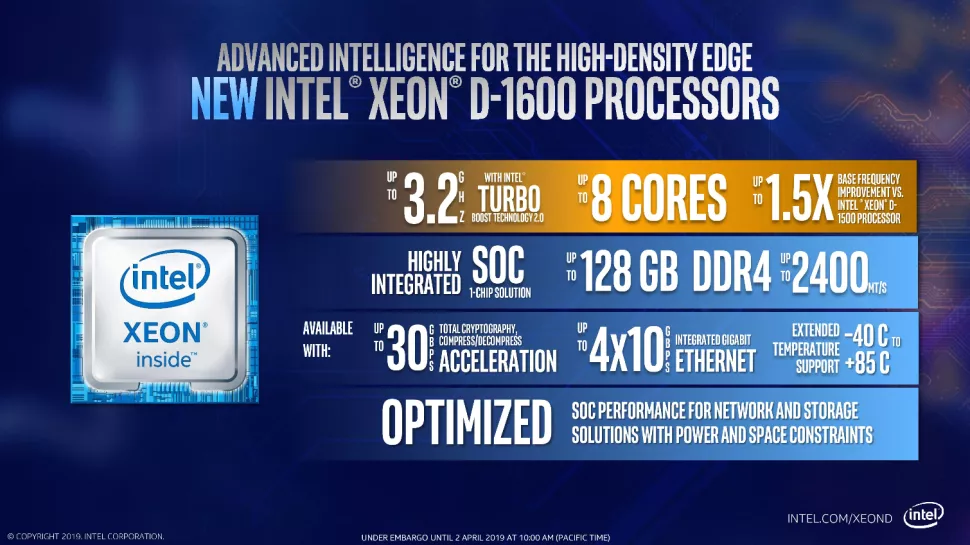

Intel’s Xeon D-1600 series slots in as the SoC (System on Chip) solution for power- and space-constrained environments, a segment that is critical to Intel’s 5G ambitions. These chips find their way into a range of devices, but edge processing for 5G networks is a specific target for this new generation of SoCs.

Xeon D-1600 pricing spans from $106 to $748, highlighting that this broad stack of Xeon D-1600 processors, which slot in under the throughput-optimized D-2100 series, addresses a plethora of different use-cases.

The Broadwell-based D-1600 lineup spans up to eight cores and supports up to 128GB of dual-channel DDR4 clocked at (up to) 2400 MT/s, while the D-2100 series spans from four cores to 16 and supports up to 512GB of memory.

Critically, the D-1600 SoCs also come equipped with up to four integrated 10GbE ports. The Xeon D-1600 processors span from a 27W TDP up to 65W, making them well-suited for a wide range of power-constrained applications. Core counts span from two to eight, with clock speeds ranging from a 2.5 GHz base frequency to a 3.2 GHz boost.

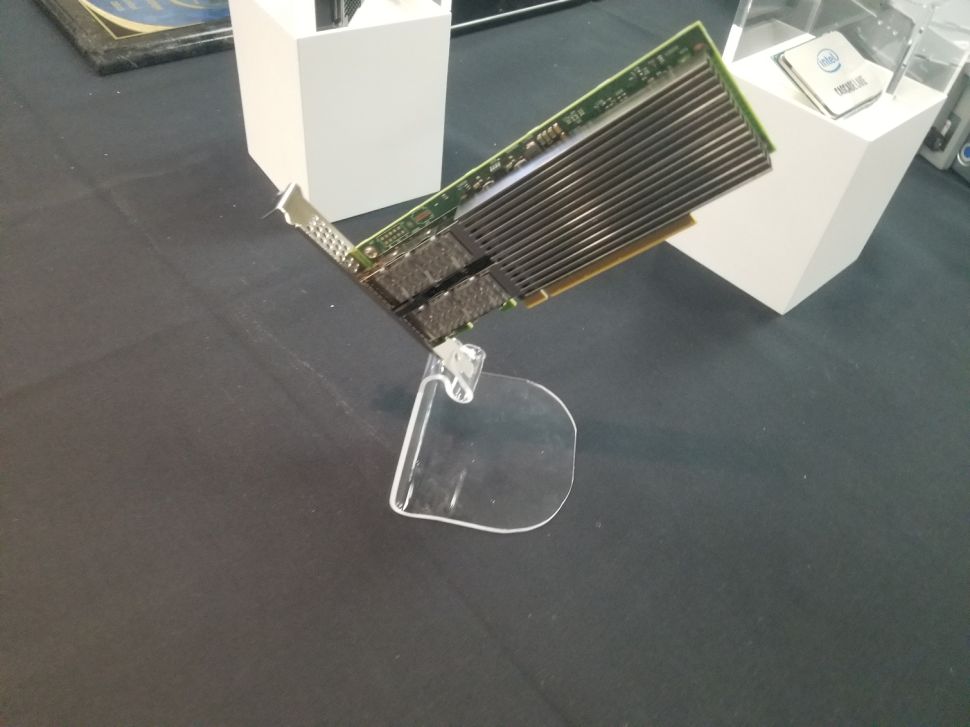

Intel Columbiaville 800 Series Ethernet Adapters

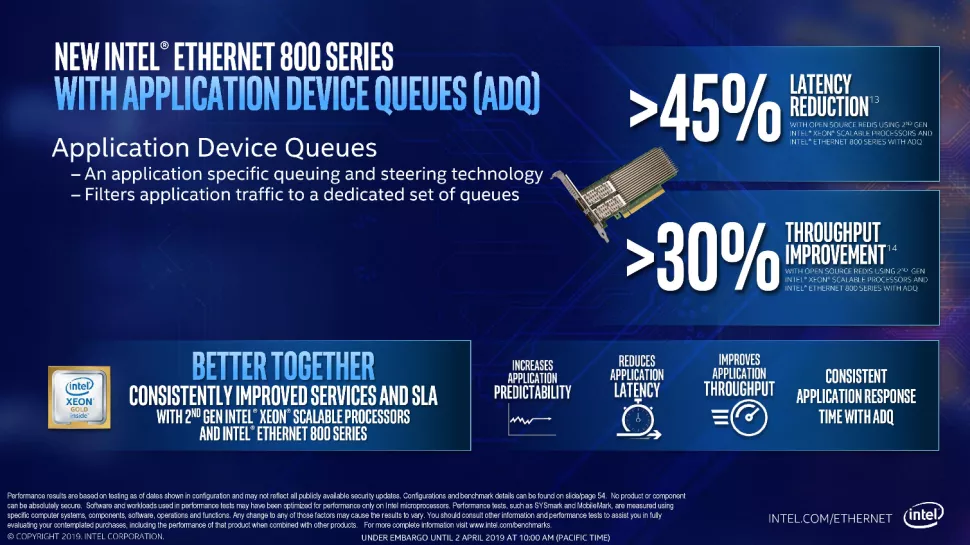

Intel’s Ethernet adapters don’t draw as many flashbulbs, but they serve as a key strategic asset for the company. Intel’s new 800 Series adapters offer up to 100Gbps port speeds and support the new ADQ (Application Device Queue) feature, which partitions network traffic into dedicated swim lanes to boost performance significantly. The adapters also support Dynamic Device Personalization (DDP) profiles that enable smart packet sorting to route data intelligently. They also support RDMA via iWARP and RoCE v2.
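ADQ’s internals and configuration interface aren’t covered here, so the following is only a conceptual sketch of the swim-lane idea, not Intel’s implementation: traffic is first steered to a queue set reserved for its application (keyed here by a hypothetical destination-port table on a hypothetical 16-queue adapter), then spread across that set with a stable flow hash, RSS-style, so one application’s bursts don’t land in another application’s queues.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical partition of a 16-queue adapter: each latency-sensitive application
# owns a dedicated range of receive queues; all other traffic shares the rest.
APP_QUEUE_SETS = {
    6379: range(0, 4),   # e.g. a key-value store
    443: range(4, 12),   # e.g. a web front end
}
SHARED_QUEUES = range(12, 16)


def select_queue(flow):
    """Steer a flow to its application's queue set, then spread flows within the set by hash."""
    queues = APP_QUEUE_SETS.get(flow.dst_port, SHARED_QUEUES)
    key = f"{flow.src_ip}:{flow.src_port}>{flow.dst_ip}:{flow.dst_port}".encode()
    # crc32 is deterministic, so every packet of a flow lands on the same queue
    return queues[zlib.crc32(key) % len(queues)]


for port in (6379, 443, 8080):
    flow = Flow("10.0.0.1", 50000, "10.0.0.2", port)
    print(port, "->", select_queue(flow))
```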

Intel hasn’t released pricing information for the cards yet, which are sampling to customers, but expects them to launch in Q3 2019.

Intel Optane SSD DC D4800X and D5-P4326

Intel announced its new DC D4800X Optane SSDs, which offer dual-port functionality that provides multiple pathways into the storage device. This feature provides redundancy, failover, and multi-pathing capabilities for mission-critical applications.

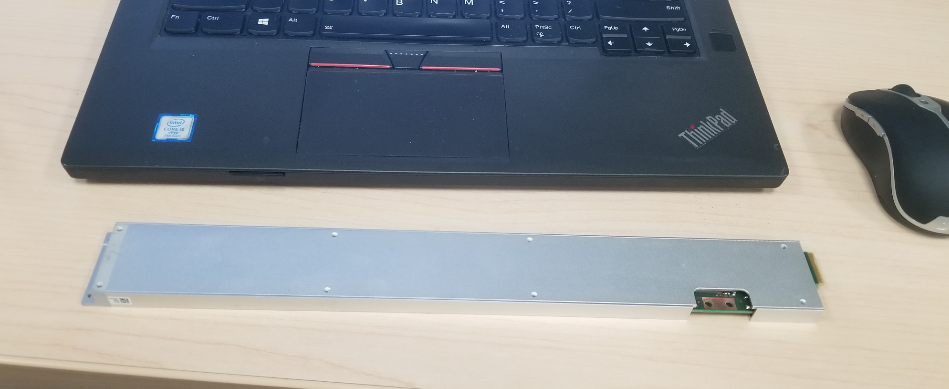

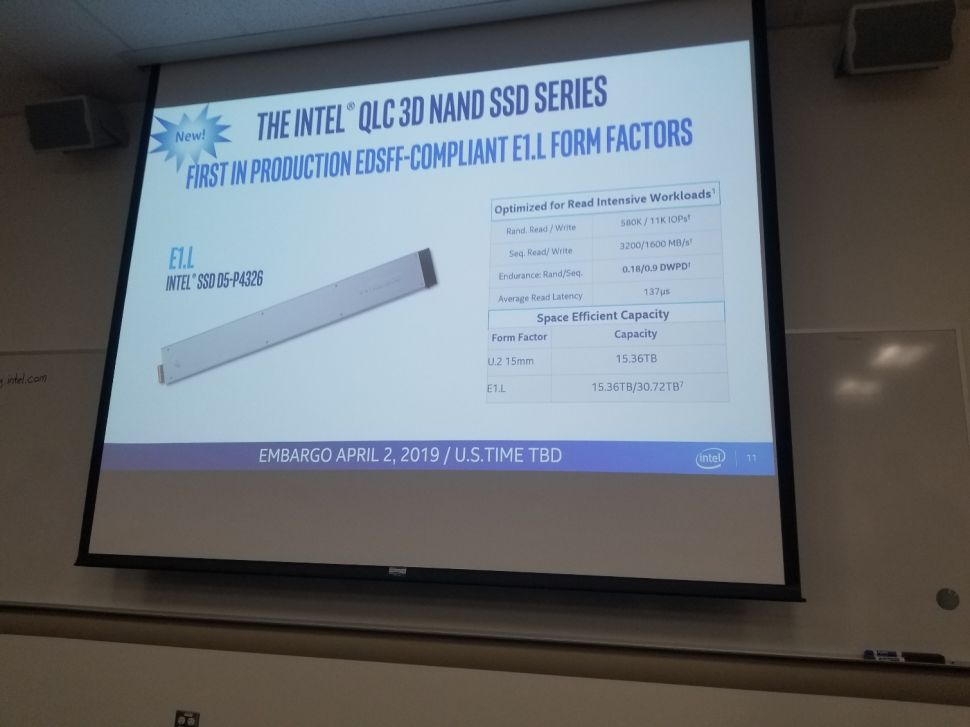

Intel also announced the D5-P4326 SSD. This new SSD adheres to the new standardized EDSFF “ruler” form factor and boosts capacity by switching to QLC (Quad-Level Cell) flash. Even though we’ve seen Intel’s MLC variants of these drives demoed for years, these new models are the first for the general market.

The denser flash enables up to 30.72TB per ruler and up to 1PB of storage per 1U server. Random read/write speeds weigh in at 580,000/11,000 IOPS, while sequential read/write performance lands at 3,200/1,600 MB/s. As expected, QLC flash results in reduced endurance of 0.9 DWPD (Drive Writes Per Day), but this should be sufficient for warm and cold data storage.
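Both the 1PB-per-1U claim and the 0.9 DWPD rating translate into simple arithmetic. The sketch assumes 32 rulers in a fully populated 1U chassis and a five-year warranty term for the endurance math; neither figure is stated in this article, so treat both as assumptions.

```python
RULER_CAPACITY_TB = 30.72  # largest D5-P4326 capacity
DWPD = 0.9                 # rated drive writes per day
DRIVES_PER_1U = 32         # assumption: fully populated 1U ruler chassis
WARRANTY_YEARS = 5         # assumption: warranty term used for the endurance math

raw_tb_per_1u = RULER_CAPACITY_TB * DRIVES_PER_1U
daily_writes_tb = RULER_CAPACITY_TB * DWPD
lifetime_writes_tb = daily_writes_tb * 365 * WARRANTY_YEARS

print(f"{raw_tb_per_1u:.2f} TB per 1U")  # 983.04 TB per 1U, roughly 1PB
print(f"{daily_writes_tb:.3f} TB of writes per day per drive")
print(f"{lifetime_writes_tb:,.0f} TB written per drive over {WARRANTY_YEARS} years")
```

Even at under one drive write per day, a single 30.72TB ruler would be rated for tens of petabytes of writes over that period, which is ample for read-heavy warm and cold tiers.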

Intel hasn’t released performance or pricing specifics of its new dual port Optane SSDs, but we’ll update as more information becomes available.

Also, stay tuned for our deep-dive analysis of Intel’s new 10nm Agilex FPGAs.